Nura

At Nuralogix, I developed a groundbreaking medical app that measures vitals using only the iPhone camera. This first-of-its-kind technology combines AI/ML with iOS to accurately read heart rate, blood pressure, and stress levels.

3

Vital Signs Measured

First

Camera-based Medical App

Major Contributions

- Reading the Heart Rate, Blood Pressure, and Stress

- I worked alongside the Machine Learning team to build a service that consumed data from the iOS device. The device sent statistical data derived from the camera's video feed to the server over a WebSocket, which allowed the server to infer the three vitals above.

- I implemented the on-device feature extraction process so that inference could run efficiently on the server.

- I built and designed the main page where the vitals were displayed to the user.
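To illustrate the device-to-server flow described above, here is a minimal, language-neutral sketch in Python. It is not the app's actual code: the choice of per-channel colour means as the "statistical data", the JSON message layout, and the names `frame_features` and `encode_message` are all assumptions made for illustration. The idea is that each frame is reduced to a few numbers on the device, so only a small message needs to travel over the WebSocket.

```python
import json
from statistics import fmean


def frame_features(pixels, frame_index, timestamp_ms):
    """Reduce one frame's region-of-interest pixels to per-channel means.

    `pixels` is a non-empty list of (r, g, b) tuples. Using channel means
    as the per-frame statistic is an assumption for this sketch.
    """
    reds, greens, blues = zip(*pixels)
    return {
        "frame": frame_index,
        "t_ms": timestamp_ms,
        "mean_rgb": [fmean(reds), fmean(greens), fmean(blues)],
    }


def encode_message(features):
    """Serialize a batch of per-frame features into one text message.

    The message shape ("type", "frames") is hypothetical.
    """
    return json.dumps({"type": "features", "frames": features})


# Three sample pixels standing in for a skin region in one video frame.
roi_pixels = [(200, 120, 100), (210, 130, 110), (190, 110, 90)]
message = encode_message([frame_features(roi_pixels, 0, 0)])
```

A real client would send `message` as a text frame on an open WebSocket connection. Transmitting summary statistics rather than raw video keeps bandwidth low and leaves the heavier inference work to the server.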

- Application Design Documentation, Agile Process, and CI/CD

- I gathered and implemented the requirements needed to support development of the hardware device. I documented the image feature extraction pipelines and functions that had to be ported to C++ and built into libraries.

- I guided and implemented an agile process that was adopted by other members of the software applications team.

- I integrated fastlane and Jenkins to create a build pipeline that delivered the application to stakeholders.

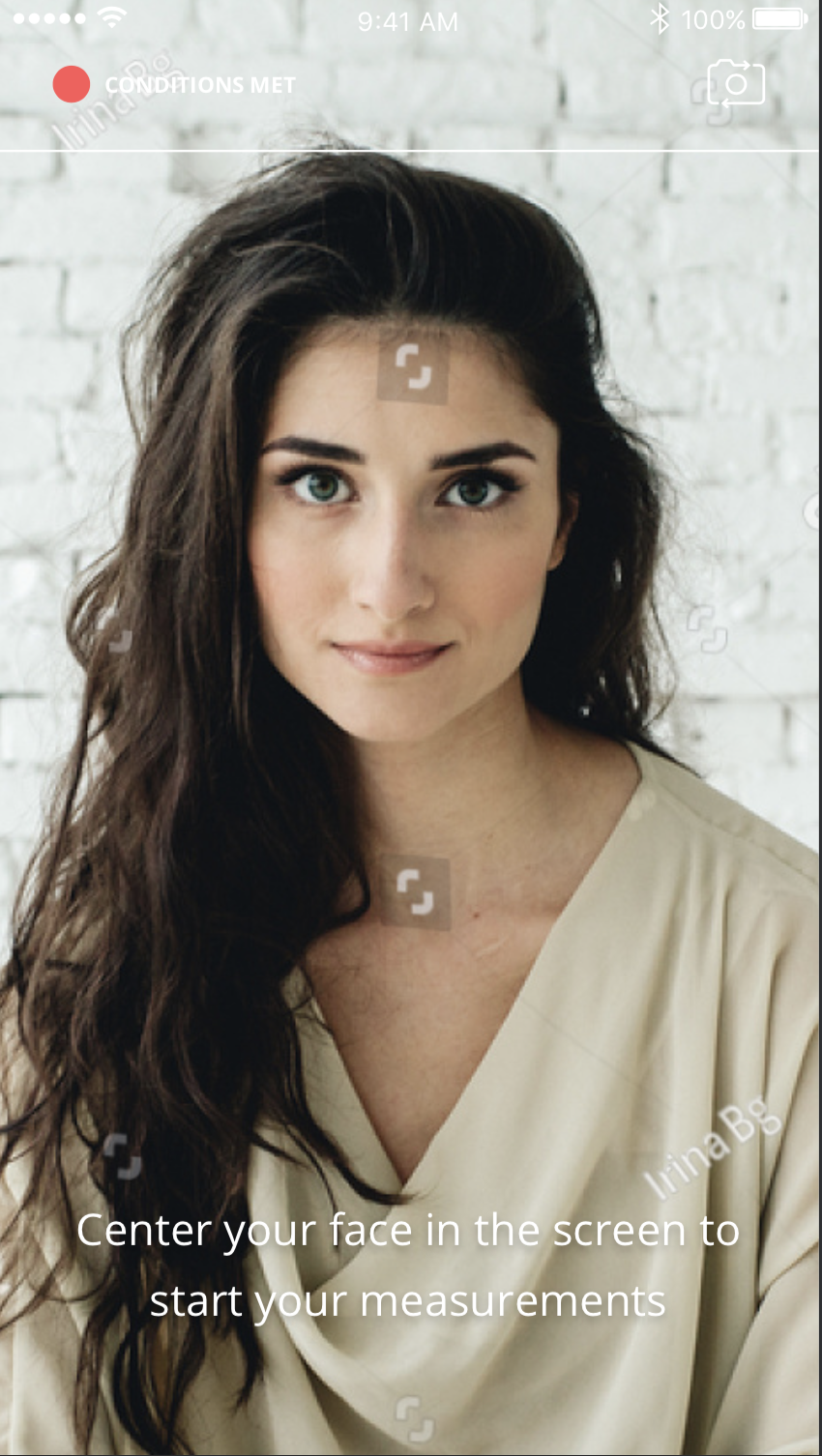

- Designed and implemented the UI and trained a DLib model to track the face and its features

- I worked with an outside art team on the onboarding screens for Nura and implemented them using Lottie.

- I trained and implemented the mobile DLib model to capture fiducial points on the face and draw regions of interest.
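As a rough illustration of turning fiducial points into a region of interest, the sketch below computes a padded bounding rectangle around a set of landmark coordinates. It does not call the DLib API. It assumes the landmarks are already available as plain `(x, y)` pairs, and the function name `bounding_roi` and the sample coordinates are hypothetical.

```python
def bounding_roi(points, padding=0):
    """Return (x, y, width, height) of the rectangle enclosing `points`.

    `points` is a non-empty list of (x, y) landmark coordinates in pixels;
    `padding` grows the rectangle by that many pixels on every side.
    """
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    left, top = min(xs) - padding, min(ys) - padding
    right, bottom = max(xs) + padding, max(ys) + padding
    return (left, top, right - left, bottom - top)


# Hypothetical landmark subset, e.g. points outlining one cheek.
cheek_points = [(10, 20), (30, 25), (20, 40)]
roi = bounding_roi(cheek_points, padding=2)
```

An axis-aligned rectangle is the simplest possible region. A production pipeline might instead use a polygon through the landmarks so that the region follows the contour of the face.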

App Screenshots